| Parameter | Description |
| --- | --- |
| PROJECT_NAME | The name of the project. Required. |
| PROJECT_DISPLAYNAME | The display name of the project. May be empty. |
| PROJECT_DESCRIPTION | The description of the project. May be empty. |
| PROJECT_ADMIN_USER | The user name of the administrating user. |
| PROJECT_REQUESTING_USER | The user name of the requesting user. |

Access to the API is granted to developers with the self-provisioner role and the self-provisioners cluster role binding. This role is available to all authenticated developers by default.

2.3.2. Modifying the template for new projects

As a cluster administrator, you can modify the default project template so that new projects are created using your custom requirements.

To create your own custom project template:

Procedure

Log in as a user with cluster-admin privileges.

Generate the default project template:

$ oc adm create-bootstrap-project-template -o yaml > template.yaml

Use a text editor to modify the generated template.yaml file by adding objects or modifying existing objects.

The project template must be created in the openshift-config namespace. Load your modified template:

$ oc create -f template.yaml -n openshift-config

Edit the project configuration resource using the web console or CLI.

Using the web console:

Navigate to the Administration → Cluster Settings page.

Click Configuration to view all configuration resources.

Find the entry for Project and click Edit YAML.

Using the CLI:

Edit the project.config.openshift.io/cluster resource:

$ oc edit project.config.openshift.io/cluster

Update the spec section to include the projectRequestTemplate and name parameters, and set the name of your uploaded project template. The default name is project-request.
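As a rough sketch of the CLI route, the same change can be made non-interactively with a merge patch instead of an interactive edit. The template name below is the default name mentioned above; substitute the name of the template you loaded. The final command is left commented out because it assumes a logged-in oc client with cluster-admin privileges.

```shell
# Name of the uploaded project template (the default name; adjust as needed).
TEMPLATE_NAME="project-request"

# Build a merge patch that sets spec.projectRequestTemplate.name.
PATCH=$(printf '{"spec":{"projectRequestTemplate":{"name":"%s"}}}' "$TEMPLATE_NAME")
echo "$PATCH"

# Apply the patch on a cluster where you are logged in as a cluster administrator:
# oc patch project.config.openshift.io/cluster --type=merge -p "$PATCH"
```

Printing the patch before applying it makes it easy to review the exact change to the spec section.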

2.3.3. Disabling project self-provisioning

You can prevent an authenticated user group from self-provisioning new projects.

Procedure

Log in as a user with cluster-admin privileges.

View the self-provisioners cluster role binding usage by running the following command:

$ oc describe clusterrolebinding.rbac self-provisioners

If the self-provisioners cluster role binding binds the self-provisioner role to more users, groups, or service accounts than the system:authenticated:oauth group, run the following command:

$ oc adm policy \
    remove-cluster-role-from-group self-provisioner \
    system:authenticated:oauth

Edit the self-provisioners cluster role binding to prevent automatic updates to the role. Automatic updates reset the cluster roles to the default state.

To update the role binding using the CLI:

Run the following command:

$ oc edit clusterrolebinding.rbac self-provisioners

In the displayed role binding, set the rbac.authorization.kubernetes.io/autoupdate parameter value to false, as shown in the following example:

apiVersion: authorization.openshift.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "false"
# ...

To update the role binding by using a single command:

$ oc patch clusterrolebinding.rbac self-provisioners -p '{ "metadata": { "annotations": { "rbac.authorization.kubernetes.io/autoupdate": "false" } } }'

Log in as an authenticated user and verify that the user can no longer self-provision a project:

$ oc new-project test

2.3.4. Customizing the project request message

When a developer or a service account that is unable to self-provision projects makes a project creation request using the web console or CLI, the following error message is returned by default:

You may not request a new project via this API.

Cluster administrators can customize this message. Consider updating it to provide further instructions on how to request a new project specific to your organization. For example:

To request a project, contact your system administrator at projectname@example.com.

To request a new project, fill out the project request form located at https://internal.example.com/openshift-project-request.

To customize the project request message:

Procedure

Edit the project configuration resource using the web console or CLI.

Using the web console:

Navigate to the Administration → Cluster Settings page.

Click Configuration to view all configuration resources.

Find the entry for Project and click Edit YAML.

Using the CLI:

Log in as a user with cluster-admin privileges.

Edit the project.config.openshift.io/cluster resource:

$ oc edit project.config.openshift.io/cluster

Update the spec section to include the projectRequestMessage parameter and set the value to your custom message:
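A minimal sketch of what the edited resource might look like is shown here, rendered by a small helper so that the message text is easy to swap. The function name render_project_message is hypothetical, and the message passed to it is only a placeholder taken from the examples above.

```shell
# Print a Project configuration fragment that carries a custom request message.
# The message text passed in is a placeholder; use your organization's wording.
render_project_message() {
  cat <<EOF
apiVersion: config.openshift.io/v1
kind: Project
metadata:
  name: cluster
spec:
  projectRequestMessage: "$1"
EOF
}

render_project_message "To request a project, contact your system administrator at projectname@example.com."
```

Only the projectRequestMessage line under spec is the part you add; the other fields identify the existing cluster-scoped resource.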

After you save your changes, attempt to create a new project as a developer or service account that is unable to self-provision projects to verify that your changes were successfully applied.

Chapter 3. Creating applications

3.1. Creating applications by using the Developer perspective

The Developer perspective in the web console provides the following options from the +Add view to create applications and associated services and deploy them on OpenShift Container Platform:

Getting started resources: Use these resources to help you get started with the Developer Console. You can choose to hide the header using the Options menu.

Creating applications using samples

: Use existing code samples to get started with creating applications on the OpenShift Container Platform.

Build with guided documentation

: Follow the guided documentation to build applications and familiarize yourself with key concepts and terminologies.

Explore new developer features

: Explore the new features and resources within the

Developer

perspective.

Developer catalog

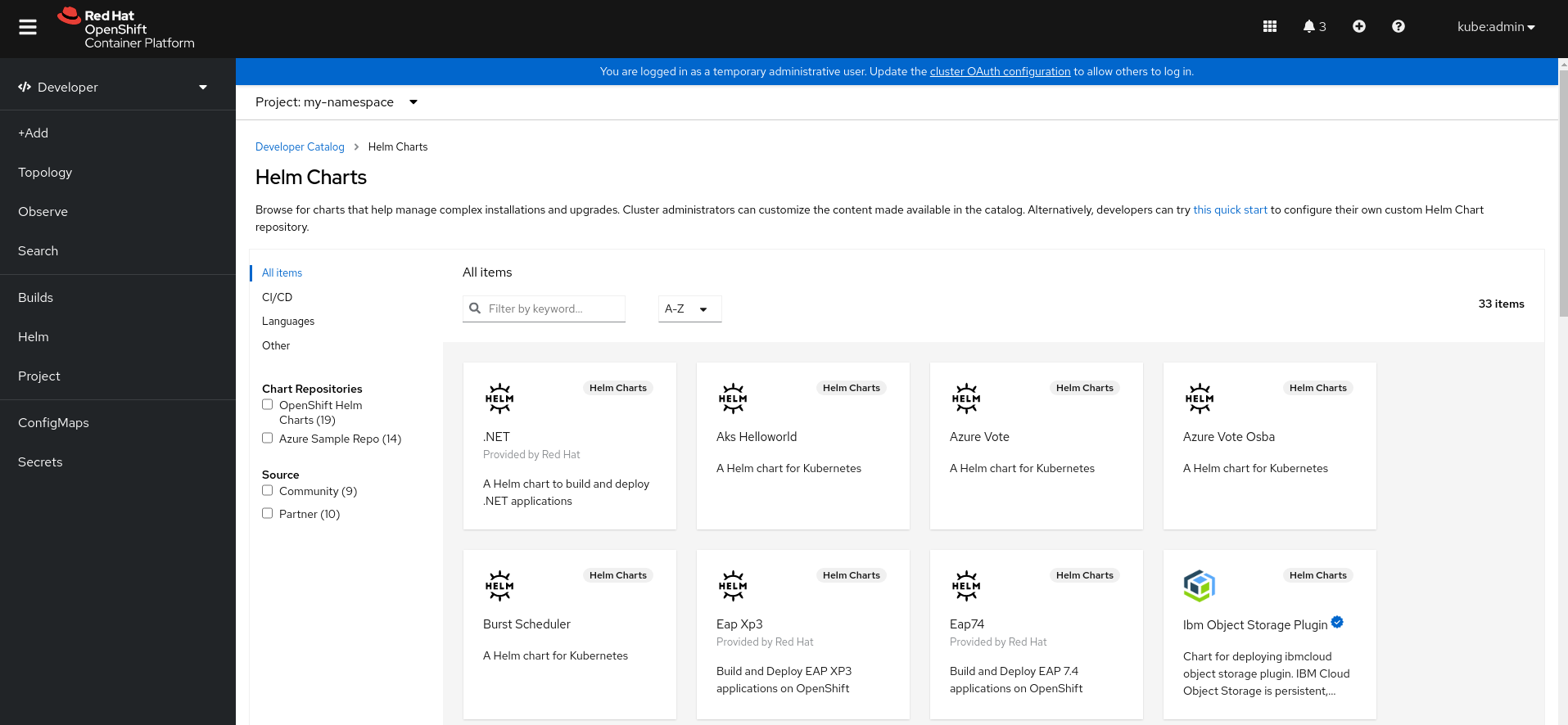

: Explore the Developer Catalog to select the required applications, services, or source-to-image builders, and then add them to your project.

All Services

: Browse the catalog to discover services across OpenShift Container Platform.

Database

: Select the required database service and add it to your application.

Operator Backed

: Select and deploy the required Operator-managed service.

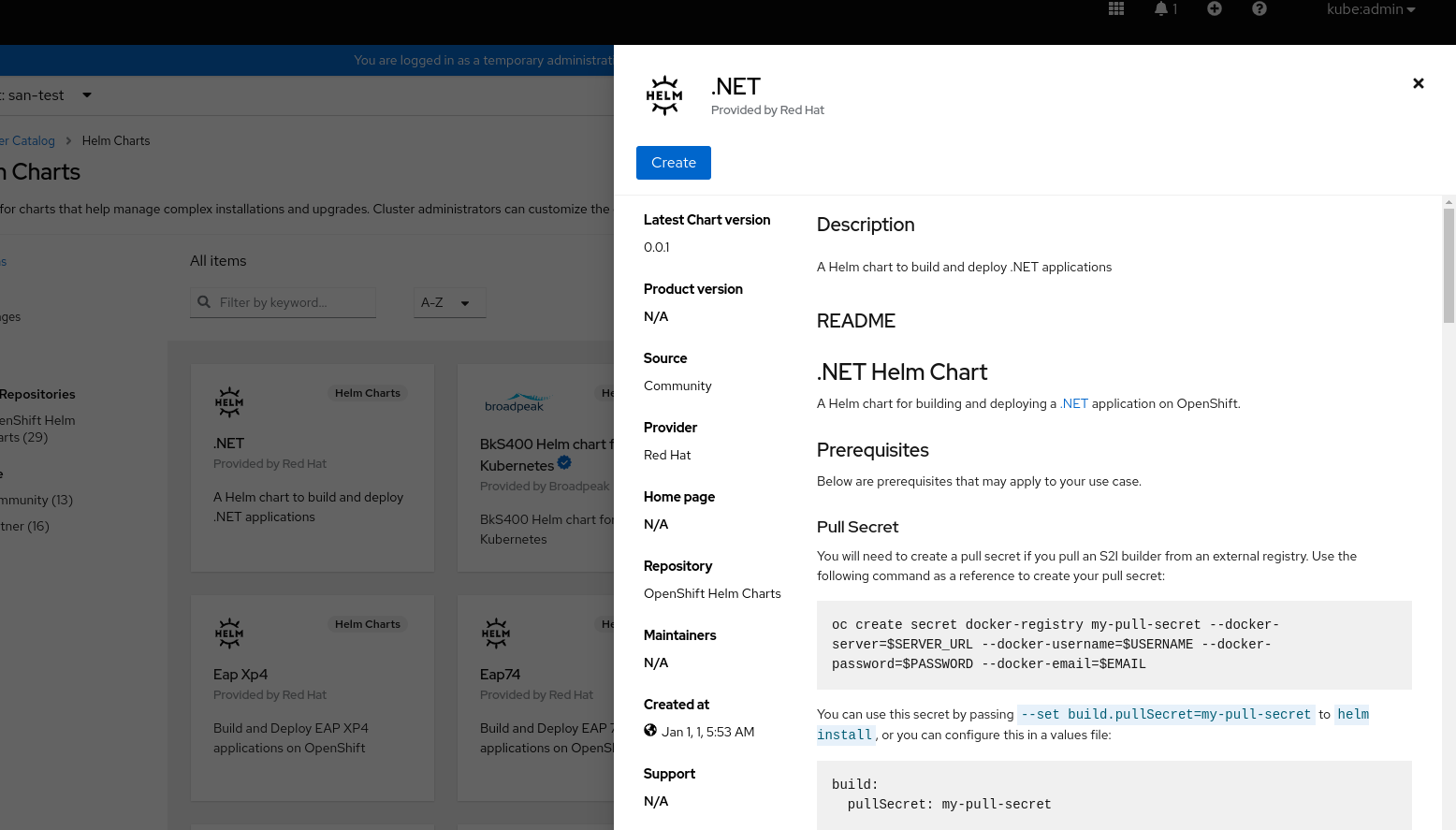

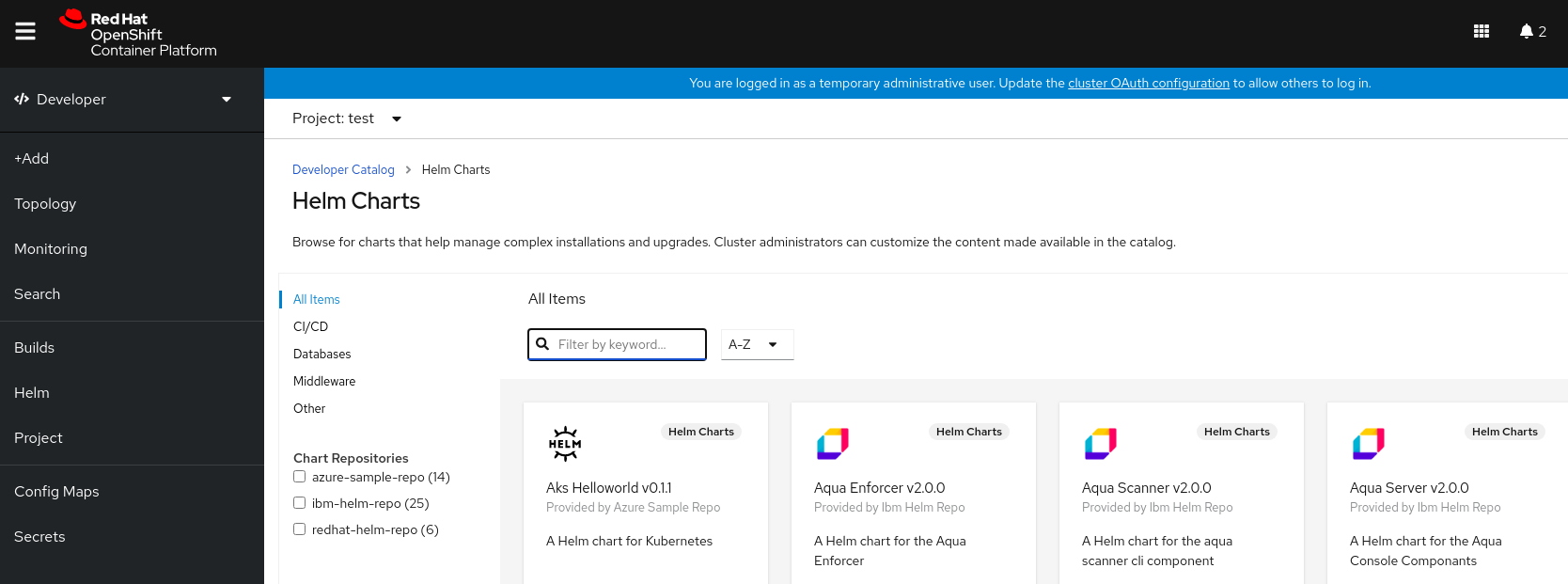

Helm chart

: Select the required Helm chart to simplify deployment of applications and services.

Devfile

: Select a devfile from the

Devfile registry

to declaratively define a development environment.

Event Source

: Select an event source to register interest in a class of events from a particular system.

The Managed services option is also available if the RHOAS Operator is installed.

Git repository

: Import an existing codebase, Devfile, or Dockerfile from your Git repository using the

From Git

,

From Devfile

, or

From Dockerfile

options respectively, to build and deploy an application on OpenShift Container Platform.

Container images: Use existing images from an image stream or registry to deploy them on OpenShift Container Platform.

Pipelines: Use Tekton pipelines to create CI/CD pipelines for your software delivery process on OpenShift Container Platform.

Serverless

: Explore the

Serverless

options to create, build, and deploy stateless and serverless applications on the OpenShift Container Platform.

Channel

: Create a Knative channel to create an event forwarding and persistence layer with in-memory and reliable implementations.

Samples

: Explore the available sample applications to create, build, and deploy an application quickly.

Quick Starts

: Explore the quick start options to create, import, and run applications with step-by-step instructions and tasks.

From Local Machine

: Explore the

From Local Machine

tile to import or upload files on your local machine for building and deploying applications easily.

Import YAML

: Upload a YAML file to create and define resources for building and deploying applications.

Upload JAR file

: Upload a JAR file to build and deploy Java applications.

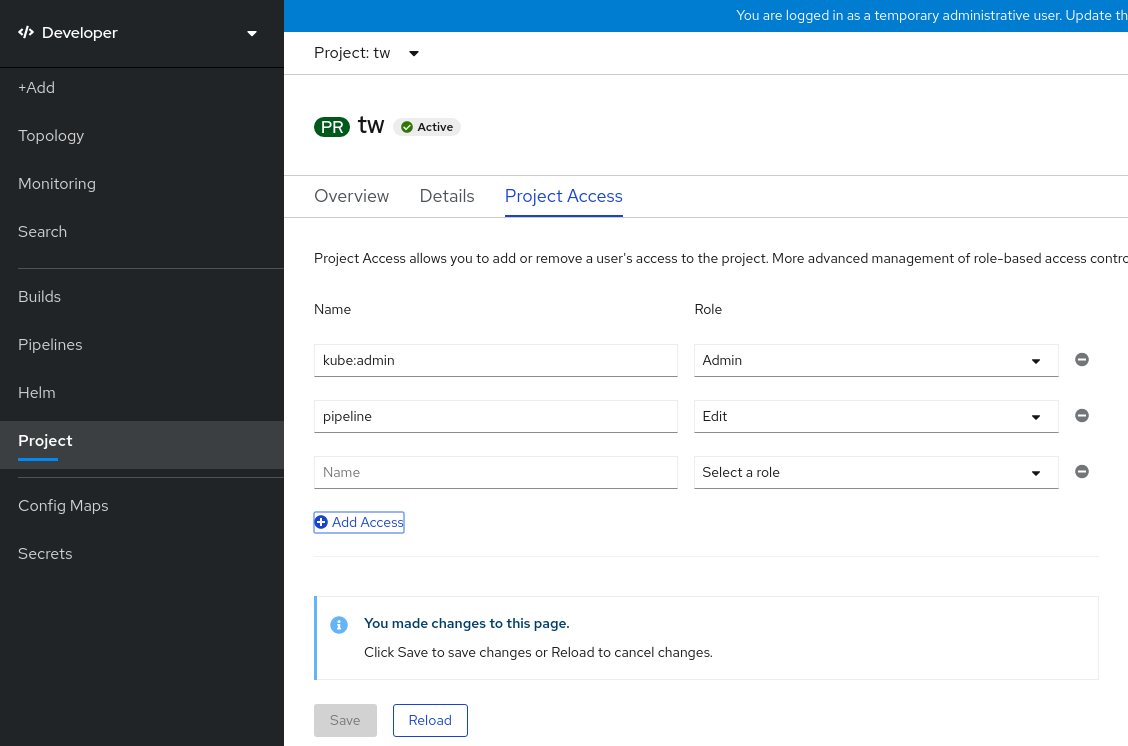

Share my Project

: Use this option to add users to or remove users from a project and to provide accessibility options to them.

Helm Chart repositories

: Use this option to add Helm Chart repositories in a namespace.

Re-ordering of resources

: Use this option to re-order pinned resources added to your navigation pane. The drag-and-drop icon is displayed on the left side of the pinned resource when you hover over it in the navigation pane. The dragged resource can be dropped only in the section where it resides.

Note that certain options, such as Pipelines, Event Source, and Import Virtual Machines, are displayed only when the OpenShift Pipelines Operator, OpenShift Serverless Operator, and OpenShift Virtualization Operator are installed, respectively.

3.1.2. Creating sample applications

You can use the sample applications in the

+Add

flow of the

Developer

perspective to create, build, and deploy applications quickly.

Prerequisites

-

You have logged in to the OpenShift Container Platform web console and are in the

Developer

perspective.

Procedure

-

In the

+Add

view, click the

Samples

tile to see the

Samples

page.

On the

Samples

page, select one of the available sample applications to see the

Create Sample Application

form.

In the

Create Sample Application Form

:

In the

Name

field, the deployment name is displayed by default. You can modify this name as required.

In the

Builder Image Version

, a builder image is selected by default. You can modify this image version by using the

Builder Image Version

drop-down list.

A sample Git repository URL is added by default.

Click

Create

to create the sample application. The build status of the sample application is displayed on the

Topology

view. After the sample application is created, you can see the deployment added to the application.

3.1.3. Creating applications by using Quick Starts

The

Quick Starts

page shows you how to create, import, and run applications on OpenShift Container Platform, with step-by-step instructions and tasks.

Prerequisites

-

You have logged in to the OpenShift Container Platform web console and are in the

Developer

perspective.

Procedure

-

In the

+Add

view, click the

Getting Started resources

→

Build with guided documentation

→

View all quick starts

link to view the

Quick Starts

page.

In the

Quick Starts

page, click the tile for the quick start that you want to use.

Click

Start

to begin the quick start.

Perform the steps that are displayed.

3.1.4. Importing a codebase from Git to create an application

You can use the

Developer

perspective to create, build, and deploy an application on OpenShift Container Platform using an existing codebase in GitHub.

The following procedure walks you through the

From Git

option in the

Developer

perspective to create an application.

Procedure

-

In the +Add view, click From Git in the Git Repository tile to see the Import from Git form.

In the Git section, enter the Git repository URL for the codebase you want to use to create an application. For example, enter the URL of this sample Node.js application: https://github.com/sclorg/nodejs-ex. The URL is then validated.

Optional: You can click

Show Advanced Git Options

to add details such as:

Git Reference

to point to code in a specific branch, tag, or commit to be used to build the application.

Context Dir

to specify the subdirectory for the application source code you want to use to build the application.

Source Secret

to create a

Secret Name

with credentials for pulling your source code from a private repository.

Optional: You can import a Devfile, a Dockerfile, a Builder Image, or a Serverless Function through your Git repository to further customize your deployment.

If your Git repository contains a Devfile, a Dockerfile, a Builder Image, or a func.yaml file, it is automatically detected and populated on the respective path fields.

If a

Devfile

, a

Dockerfile

, or a

Builder Image

are detected in the same repository, the

Devfile

is selected by default.

If

func.yaml

is detected in the Git repository, the

Import Strategy

changes to

Serverless Function

.

Alternatively, you can create a serverless function by clicking

Create Serverless function

in the

+Add

view using the Git repository URL.

To edit the file import type and select a different strategy, click the Edit import strategy option.

If multiple Devfiles, Dockerfiles, or Builder Images are detected, specify the respective paths relative to the context directory to import a specific instance.

After the Git URL is validated, the recommended builder image is selected and marked with a star. If the builder image is not auto-detected, select a builder image. For the

https://github.com/sclorg/nodejs-ex

Git URL, by default the Node.js builder image is selected.

Optional: Use the Builder Image Version drop-down list to specify a version.

Optional: Use the Edit import strategy option to select a different strategy.

Optional: For the Node.js builder image, use the Run command field to override the command to run the application.

In the

General

section:

In the

Application

field, enter a unique name for the application grouping, for example,

myapp

. Ensure that the application name is unique in a namespace.

The

Name

field to identify the resources created for this application is automatically populated based on the Git repository URL if there are no existing applications. If there are existing applications, you can choose to deploy the component within an existing application, create a new application, or keep the component unassigned.

The resource name must be unique in a namespace. Modify the resource name if you get an error.

In the

Resources

section, select:

Deployment

, to create an application in plain Kubernetes style.

Deployment Config

, to create an OpenShift Container Platform style application.

Serverless Deployment

, to create a Knative service.

To set the default resource preference for importing an application, go to

User Preferences

→

Applications

→

Resource type

field. The

Serverless Deployment

option is displayed in the

Import from Git

form only if the OpenShift Serverless Operator is installed in your cluster. The

Resources

section is not available while creating a serverless function. For further details, refer to the OpenShift Serverless documentation.

In the

Pipelines

section, select

Add Pipeline

, and then click

Show Pipeline Visualization

to see the pipeline for the application. A default pipeline is selected, but you can choose the pipeline you want from the list of available pipelines for the application.

The Add pipeline checkbox is checked and Configure PAC is selected by default if the following criteria are met:

The Pipelines Operator is installed.

pipelines-as-code is enabled.

A .tekton directory is detected in the Git repository.

Add a webhook to your repository. If

Configure PAC

is checked and the GitHub App is set up, you can see the

Use GitHub App

and

Setup a webhook

options. If GitHub App is not set up, you can only see the

Setup a webhook

option:

Go to

Settings

→

Webhooks

and click

Add webhook

.

Set the

Payload URL

to the Pipelines as Code controller public URL.

Select the content type as

application/json

.

Add a webhook secret and note it in an alternate location. With

openssl

installed on your local machine, generate a random secret.

Click

Let me select individual events

and select these events:

Commit comments

,

Issue comments

,

Pull request

, and

Pushes

.

Click

Add webhook

.
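The webhook steps above ask for a random secret but do not show a command for it. One possible way to generate it is sketched below, assuming a 20-byte secret is acceptable; the variable name WEBHOOK_SECRET is hypothetical, and the fallback branch is only there for machines without openssl.

```shell
# Generate 20 random bytes and encode them as hexadecimal characters.
if command -v openssl >/dev/null 2>&1; then
  WEBHOOK_SECRET=$(openssl rand -hex 20)
else
  # Fallback for machines without openssl: read from the kernel random source.
  WEBHOOK_SECRET=$(head -c 20 /dev/urandom | od -An -tx1 | tr -d ' \n')
fi

# Print the secret once so that you can paste it into the webhook form and store it safely.
echo "$WEBHOOK_SECRET"
```

Keep the generated value somewhere safe, because the same secret is needed later when the repository is connected to the Pipelines as Code controller.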

Optional: In the Advanced Options section, the Target port and the Create a route to the application options are selected by default so that you can access your application using a publicly available URL.

If your application does not expose its data on the default public port, 80, clear the check box, and set the target port number you want to expose.

Optional: You can use the following advanced options to further customize your application:

Routing

By clicking the Routing link, you can perform the following actions:

Customize the hostname for the route.

Specify the path the router watches.

Select the target port for the traffic from the drop-down list.

Secure your route by selecting the

Secure Route

check box. Select the required TLS termination type and set a policy for insecure traffic from the respective drop-down lists.

For serverless applications, the Knative service manages all the routing options above. However, you can customize the target port for traffic, if required. If the target port is not specified, the default port of

8080

is used.

Domain mapping

If you are creating a Serverless Deployment, you can add a custom domain mapping to the Knative service during creation.

In the

Advanced options

section, click

Show advanced Routing options

.

If the domain mapping CR that you want to map to the service already exists, you can select it from the

Domain mapping

drop-down menu.

If you want to create a new domain mapping CR, type the domain name into the box, and select the

Create

option. For example, if you type in

example.com

, the

Create

option is

Create "example.com"

.

Health Checks

Click the

Health Checks

link to add Readiness, Liveness, and Startup probes to your application. All the probes have prepopulated default data; you can add the probes with the default data or customize it as required.

To customize the health probes:

Click Add Readiness Probe. If required, modify the parameters to check if the container is ready to handle requests, and select the check mark to add the probe.

Click Add Liveness Probe. If required, modify the parameters to check if a container is still running, and select the check mark to add the probe.

Click Add Startup Probe. If required, modify the parameters to check if the application within the container has started, and select the check mark to add the probe.

For each of the probes, you can specify the request type (HTTP GET, Container Command, or TCP Socket) from the drop-down list. The form changes according to the selected request type. You can then modify the default values for the other parameters, such as the success and failure thresholds for the probe, number of seconds before performing the first probe after the container starts, frequency of the probe, and the timeout value.

Build Configuration and Deployment

Click the

Build Configuration

and

Deployment

links to see the respective configuration options. Some options are selected by default; you can customize them further by adding the necessary triggers and environment variables.

For serverless applications, the

Deployment

option is not displayed as the Knative configuration resource maintains the desired state for your deployment instead of a

DeploymentConfig

resource.

Scaling

Click the Scaling link to define the number of pods or instances of the application you want to deploy initially.

If you are creating a serverless deployment, you can also configure the following settings:

Min Pods

determines the lower limit for the number of pods that must be running at any given time for a Knative service. This is also known as the

minScale

setting.

Max Pods

determines the upper limit for the number of pods that can be running at any given time for a Knative service. This is also known as the

maxScale

setting.

Concurrency target

determines the number of concurrent requests desired for each instance of the application at a given time.

Concurrency limit

determines the limit for the number of concurrent requests allowed for each instance of the application at a given time.

Concurrency utilization

determines the percentage of the concurrent requests limit that must be met before Knative scales up additional pods to handle additional traffic.

Autoscale window

defines the time window over which metrics are averaged to provide input for scaling decisions when the autoscaler is not in panic mode. A service is scaled-to-zero if no requests are received during this window. The default duration for the autoscale window is

60s

. This is also known as the stable window.
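For orientation, the serverless scaling settings above roughly correspond to fields on a Knative service. The sketch below renders such a service, assuming the upstream Knative Serving annotation names; the helper name render_knative_service, the image reference, and all numeric values are hypothetical, and the web console may write these settings differently.

```shell
# Render a Knative Service manifest with the scaling settings filled in.
# Arguments: service name, minimum pods, maximum pods (all illustrative).
render_knative_service() {
  name="$1"; min_pods="$2"; max_pods="$3"
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: ${name}
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/min-scale: "${min_pods}"  # Min Pods
        autoscaling.knative.dev/max-scale: "${max_pods}"  # Max Pods
        autoscaling.knative.dev/target: "50"  # Concurrency target
        autoscaling.knative.dev/target-utilization-percentage: "70"  # Concurrency utilization
        autoscaling.knative.dev/window: "60s"  # Autoscale window
    spec:
      containerConcurrency: 100  # Concurrency limit
      containers:
        - image: registry.example.com/myapp:latest  # hypothetical image
EOF
}

render_knative_service myapp 1 5
```

The annotations tune the autoscaler, while containerConcurrency is a hard per-instance limit, which is why it sits in the revision spec and not among the annotations.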

Resource Limit

Click the

Resource Limit

link to set the amount of

CPU

and

Memory

resources a container is guaranteed or allowed to use when running.

Labels

Click the

Labels

link to add custom labels to your application.

Click

Create

to create the application and a success notification is displayed. You can see the build status of the application in the

Topology

view.

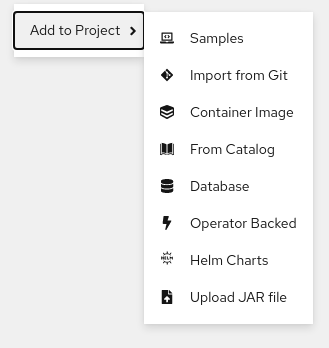

3.1.5. Deploying a Java application by uploading a JAR file

You can use the web console

Developer

perspective to upload a JAR file by using the following options:

Navigate to the

+Add

view of the

Developer

perspective, and click

Upload JAR file

in the

From Local Machine

tile. Browse and select your JAR file, or drag a JAR file to deploy your application.

Navigate to the

Topology

view and use the

Upload JAR file

option, or drag a JAR file to deploy your application.

Use the in-context menu in the

Topology

view, and then use the

Upload JAR file

option to upload your JAR file to deploy your application.

Prerequisites

-

The Cluster Samples Operator must be installed by a cluster administrator.

You have access to the OpenShift Container Platform web console and are in the

Developer

perspective.

Procedure

-

In the

Topology

view, right-click anywhere to view the

Add to Project

menu.

Hover over the

Add to Project

menu to see the menu options, and then select the

Upload JAR file

option to see the

Upload JAR file

form. Alternatively, you can drag the JAR file into the

Topology

view.

In the

JAR file

field, browse for the required JAR file on your local machine and upload it. Alternatively, you can drag the JAR file on to the field. A toast alert is displayed at the top right if an incompatible file type is dragged into the

Topology

view. A field error is displayed if an incompatible file type is dropped on the field in the upload form.

The runtime icon and builder image are selected by default. If a builder image is not auto-detected, select a builder image. If required, you can change the version using the

Builder Image Version

drop-down list.

Optional: In the

Application Name

field, enter a unique name for your application to use for resource labelling.

In the

Name

field, enter a unique component name for the associated resources.

Optional: Using the

Advanced options

→

Resource type

drop-down list, select a different resource type from the list of default resource types.

In the

Advanced options

menu, click

Create a Route to the Application

to configure a public URL for your deployed application.

Click

Create

to deploy the application. A toast notification is shown to notify you that the JAR file is being uploaded. The toast notification also includes a link to view the build logs.

If you attempt to close the browser tab while the build is running, a web alert is displayed.

After the JAR file is uploaded and the application is deployed, you can view the application in the

Topology

view.

3.1.6. Using the Devfile registry to access devfiles

You can use the devfiles in the

+Add

flow of the

Developer

perspective to create an application. The

+Add

flow provides a complete integration with the

devfile community registry

. A devfile is a portable YAML file that describes your development environment without needing to configure it from scratch. Using the

Devfile registry

, you can use a preconfigured devfile to create an application.

Procedure

-

Navigate to

Developer Perspective

→

+Add

→

Developer Catalog

→

All Services

. A list of all the available services in the

Developer Catalog

is displayed.

Under Type, click Devfiles to browse for devfiles that support a particular language or framework. Alternatively, you can use the keyword filter to search for a particular devfile by its name, tag, or description.

Click the devfile you want to use to create an application. The devfile tile displays the details of the devfile, including the name, description, provider, and the documentation of the devfile.

Click

Create

to create an application and view the application in the

Topology

view.

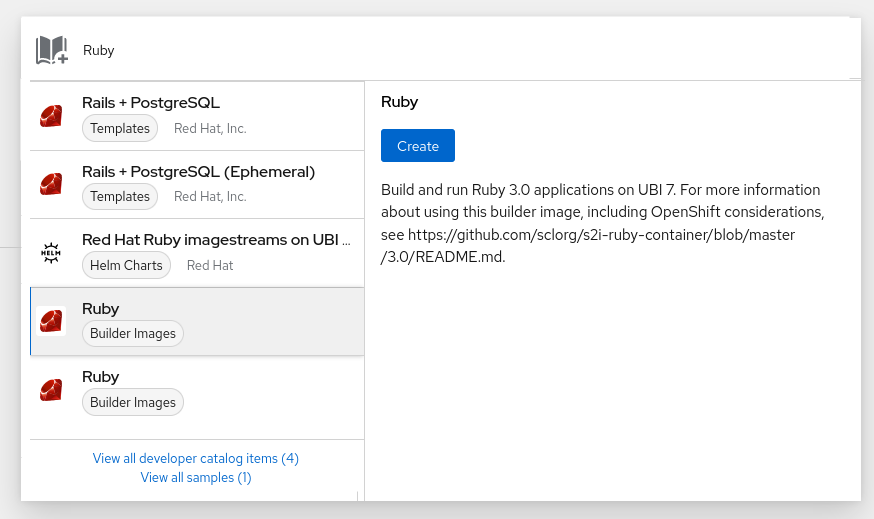

3.1.7. Using the Developer Catalog to add services or components to your application

You can use the Developer Catalog to deploy applications and services based on Operator-backed services such as Databases, Builder Images, and Helm Charts. The Developer Catalog contains a collection of application components, services, event sources, or source-to-image builders that you can add to your project. Cluster administrators can customize the content made available in the catalog.

Procedure

-

In the

Developer

perspective, navigate to the

+Add

view and from the

Developer Catalog

tile, click

All Services

to view all the available services in the

Developer Catalog

.

Under

All Services

, select the kind of service or the component you need to add to your project. For this example, select

Databases

to list all the database services and then click

MariaDB

to see the details for the service.

Click

Instantiate Template

to see an automatically populated template with details for the

MariaDB

service, and then click

Create

to create and view the MariaDB service in the

Topology

view.

3.1.8. Additional resources

3.2. Creating applications from installed Operators

Operators

are a method of packaging, deploying, and managing a Kubernetes application. You can create applications on OpenShift Container Platform using Operators that have been installed by a cluster administrator.

This guide walks developers through an example of creating applications from an installed Operator using the OpenShift Container Platform web console.

Additional resources

-

See the

Operators

guide for more on how Operators work and how the Operator Lifecycle Manager is integrated in OpenShift Container Platform.

3.2.1. Creating an etcd cluster using an Operator

This procedure walks through creating a new etcd cluster using the etcd Operator, managed by Operator Lifecycle Manager (OLM).

Prerequisites

-

Access to an OpenShift Container Platform 4.13 cluster.

The etcd Operator already installed cluster-wide by an administrator.

Procedure

-

Create a new project in the OpenShift Container Platform web console for this procedure. This example uses a project called

my-etcd

.

Navigate to the

Operators → Installed Operators

page. The Operators that have been installed to the cluster by the cluster administrator and are available for use are shown here as a list of cluster service versions (CSVs). CSVs are used to launch and manage the software provided by the Operator.

You can get this list from the CLI using:

$ oc get csv

On the

Installed Operators

page, click the etcd Operator to view more details and available actions.

As shown under Provided APIs, this Operator makes available three new resource types, including one for an etcd Cluster (the EtcdCluster resource). These objects work similarly to the built-in native Kubernetes ones, such as Deployment or ReplicaSet, but contain logic specific to managing etcd.

Create a new etcd cluster:

In the

etcd Cluster

API box, click

Create instance

.

The next page allows you to make any modifications to the minimal starting template of an

EtcdCluster

object, such as the size of the cluster. For now, click

Create

to finalize. This triggers the Operator to start up the pods, services, and other components of the new etcd cluster.

Click the

example

etcd cluster, then click the

Resources

tab to see that your project now contains a number of resources created and configured automatically by the Operator.

Verify that a Kubernetes service has been created that allows you to access the database from other pods in your project.

All users with the

edit

role in a given project can create, manage, and delete application instances (an etcd cluster, in this example) managed by Operators that have already been created in the project, in a self-service manner, just like a cloud service. If you want to enable additional users with this ability, project administrators can add the role using the following command:

$ oc policy add-role-to-user edit <user> -n <target_project>

You now have an etcd cluster that will react to failures and rebalance data as pods become unhealthy or are migrated between nodes in the cluster. Most importantly, cluster administrators or developers with proper access can now easily use the database with their applications.
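The minimal starting template mentioned in the procedure might look like the manifest rendered below. This is a sketch that assumes the upstream etcd Operator API group and version, etcd.database.coreos.com/v1beta2; check the template shown in your console before relying on it. The helper name render_etcd_cluster is hypothetical, and the name example and project my-etcd follow the procedure above.

```shell
# Render a minimal EtcdCluster object. Arguments: cluster name, member count.
render_etcd_cluster() {
  cat <<EOF
apiVersion: etcd.database.coreos.com/v1beta2  # assumed API group and version
kind: EtcdCluster
metadata:
  name: $1
  namespace: my-etcd
spec:
  size: $2
EOF
}

render_etcd_cluster example 3
```

Changing the size value is the kind of modification the console form lets you make before you click Create.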

3.3. Creating applications by using the CLI

You can create an OpenShift Container Platform application from components that include source or binary code, images, and templates by using the OpenShift Container Platform CLI.

The set of objects created by

new-app

depends on the artifacts passed as input: source repositories, images, or templates.

3.3.1. Creating an application from source code

With the

new-app

command you can create applications from source code in a local or remote Git repository.

The

new-app

command creates a build configuration, which itself creates a new application image from your source code. The

new-app

command typically also creates a

Deployment

object to deploy the new image, and a service to provide load-balanced access to the deployment running your image.

OpenShift Container Platform automatically detects whether the pipeline, source, or docker build strategy should be used, and in the case of source build, detects an appropriate language builder image.

To create an application from a Git repository in a local directory:

$ oc new-app /<path to source code>

If you use a local Git repository, the repository must have a remote named

origin

that points to a URL that is accessible by the OpenShift Container Platform cluster. If there is no recognized remote, running the

new-app

command will create a binary build.

To create an application from a remote Git repository:

$ oc new-app https://github.com/sclorg/cakephp-ex

To create an application from a private remote Git repository:

$ oc new-app https://github.com/youruser/yourprivaterepo --source-secret=yoursecret

If you use a private remote Git repository, you can use the

--source-secret

flag to specify an existing source clone secret that will get injected into your build config to access the repository.

You can use a subdirectory of your source code repository by specifying a

--context-dir

flag. To create an application from a remote Git repository and a context subdirectory:

$ oc new-app https://github.com/sclorg/s2i-ruby-container.git \
    --context-dir=2.0/test/puma-test-app

Also, when specifying a remote URL, you can specify a Git branch to use by appending

#<branch_name>

to the end of the URL:

$ oc new-app https://github.com/openshift/ruby-hello-world.git#beta4

3.3.1.3. Build strategy detection

OpenShift Container Platform automatically determines which build strategy to use by detecting certain files:

If a Jenkinsfile exists in the root or specified context directory of the source repository when creating a new application, OpenShift Container Platform generates a pipeline build strategy.

The pipeline build strategy is deprecated; consider using Red Hat OpenShift Pipelines instead.

If a Dockerfile exists in the root or specified context directory of the source repository when creating a new application, OpenShift Container Platform generates a docker build strategy.

If neither a Jenkinsfile nor a Dockerfile is detected, OpenShift Container Platform generates a source build strategy.

Override the automatically detected build strategy by setting the --strategy flag to docker, pipeline, or source:

$ oc new-app /home/user/code/myapp --strategy=docker

The

oc

command requires that files containing build sources are available in a remote Git repository. For all source builds, confirm that a remote is configured by running

git remote -v

.
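The detection rules in this section can be approximated locally with a short script. This is a sketch under stated assumptions: the `detect_strategy` function is hypothetical, the file names `Jenkinsfile` and `Dockerfile` are assumed, and checking for the Jenkins file first when both files exist is also an assumption, because the text above does not state a precedence.

```shell
# Hypothetical helper: guess the build strategy for a directory.
# Assumption: a Jenkinsfile takes precedence over a Dockerfile.
detect_strategy() {
  dir=${1:-.}
  if [ -f "$dir/Jenkinsfile" ]; then
    echo pipeline
  elif [ -f "$dir/Dockerfile" ]; then
    echo docker
  else
    echo source
  fi
}

# Inspect the current directory.
detect_strategy .
```

You can compare the printed value with the strategy that `new-app` reports, and pass `--strategy` if you want a different one.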

3.3.1.4. Language detection

If you use the source build strategy,

new-app

attempts to determine the language builder to use by the presence of certain files in the root or specified context directory of the repository:

Table 3.1. Languages detected by new-app

| Language | Files |
|---|---|
| dotnet | project.json, *.csproj |
| jee | pom.xml |
| nodejs | app.json, package.json |
| perl | cpanfile, index.pl |
| php | composer.json, index.php |
| python | requirements.txt, setup.py |
| ruby | Gemfile, Rakefile, config.ru |
| scala | build.sbt |
| golang | Godeps, main.go |

After a language is detected,

new-app

searches the OpenShift Container Platform server for image stream tags that have a

supports

annotation matching the detected language, or an image stream that matches the name of the detected language. If a match is not found,

new-app

searches the

Docker Hub registry

for an image that matches the detected language based on name.

You can override the image the builder uses for a particular source repository by specifying the image, either an image stream or container specification, and the repository with a

~

as a separator. Note that if this is done, build strategy detection and language detection are not carried out.

For example, to use the

myproject/my-ruby

imagestream with the source in a remote repository:

$ oc new-app myproject/my-ruby~https://github.com/openshift/ruby-hello-world.git

To use the

openshift/ruby-20-centos7:latest

container image stream with the source in a local repository:

$ oc new-app openshift/ruby-20-centos7:latest~/home/user/code/my-ruby-app

Language detection requires the Git client to be locally installed so that your repository can be cloned and inspected. If Git is not available, you can avoid the language detection step by specifying the builder image to use with your repository with the

<image>~<repository>

syntax.

The

-i <image> <repository>

invocation requires that

new-app

attempt to clone

repository

to determine what type of artifact it is, so this will fail if Git is not available.

The

-i <image> --code <repository>

invocation requires that

new-app

clone

repository

to determine whether

image

should be used as a builder for the source code, or deployed separately, as in the case of a database image.
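The file-based language detection described in Table 3.1 can be illustrated with a small script. This is a sketch only: `detect_language` is a hypothetical function, the order in which the files are checked is an assumption, and the language names `jee`, `perl`, `php`, and `ruby` are assumed labels for the rows keyed by `pom.xml`, `cpanfile`, `composer.json`, and `Gemfile`.

```shell
# Hypothetical helper: guess the language of a source directory
# from marker files. The check order is an assumption.
detect_language() {
  dir=${1:-.}
  # Succeeds if at least one of the named files exists in $dir.
  any_exists() {
    for name in "$@"; do
      [ -e "$dir/$name" ] && return 0
    done
    return 1
  }
  # An unmatched glob stays literal, so the -e test fails for it.
  for csproj in "$dir"/*.csproj; do
    [ -e "$csproj" ] && { echo dotnet; return 0; }
  done
  if   any_exists project.json;               then echo dotnet
  elif any_exists pom.xml;                    then echo jee
  elif any_exists app.json package.json;      then echo nodejs
  elif any_exists cpanfile index.pl;          then echo perl
  elif any_exists composer.json index.php;    then echo php
  elif any_exists requirements.txt setup.py;  then echo python
  elif any_exists Gemfile Rakefile config.ru; then echo ruby
  elif any_exists build.sbt;                  then echo scala
  elif any_exists Godeps main.go;             then echo golang
  else echo unknown; return 1
  fi
}

# Inspect the current directory; ignore the non-zero status for "unknown".
detect_language . || true
```

If the sketch prints `unknown`, specifying the builder image with the `<image>~<repository>` syntax avoids relying on detection.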

3.3.2. Creating an application from an image

You can deploy an application from an existing image. Images can come from image streams in the OpenShift Container Platform server, images in a specific registry, or images in the local Docker server.

The

new-app

command attempts to determine the type of image specified in the arguments passed to it. However, you can explicitly tell

new-app

whether the image is a container image using the

--docker-image

argument or an image stream using the

-i|--image-stream

argument.

If you specify an image from your local Docker repository, you must ensure that the same image is available to the OpenShift Container Platform cluster nodes.

3.3.2.1. Docker Hub MySQL image

Create an application from the Docker Hub MySQL image, for example:

$ oc new-app mysql

3.3.2.2. Image in a private registry

To create an application using an image in a private registry, specify the full container image specification:

$ oc new-app myregistry:5000/example/myimage

3.3.2.3. Existing image stream and optional image stream tag

Create an application from an existing image stream and optional image stream tag:

$ oc new-app my-stream:v1

3.3.3. Creating an application from a template

You can create an application from a previously stored template or from a template file, by specifying the name of the template as an argument. For example, you can store a sample application template and use it to create an application.

Upload an application template to your current project’s template library. The following example uploads an application template from a file called

examples/sample-app/application-template-stibuild.json

:

$ oc create -f examples/sample-app/application-template-stibuild.json

Then create a new application by referencing the application template. In this example, the template name is

ruby-helloworld-sample

:

$ oc new-app ruby-helloworld-sample

To create a new application by referencing a template file in your local file system, without first storing it in OpenShift Container Platform, use the

-f|--file

argument. For example:

$ oc new-app -f examples/sample-app/application-template-stibuild.json

3.3.3.1. Template parameters

When creating an application based on a template, use the

-p|--param

argument to set parameter values that are defined by the template:

$ oc new-app ruby-helloworld-sample \

-p ADMIN_USERNAME=admin -p ADMIN_PASSWORD=mypassword

You can store your parameters in a file, then use that file with

--param-file

when instantiating a template. If you want to read the parameters from standard input, use

--param-file=-

. The following is an example file called

helloworld.params

:

ADMIN_USERNAME=admin

ADMIN_PASSWORD=mypassword

Reference the parameters in the file when instantiating a template:

$ oc new-app ruby-helloworld-sample --param-file=helloworld.params
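To show how a parameter file relates to repeated `-p` arguments, the following sketch converts each `KEY=VALUE` line of a file into a `-p` flag and prints the resulting command without running it. The `params_to_flags` function is hypothetical, and the sketch assumes that values contain no spaces or shell metacharacters.

```shell
# Hypothetical helper: print the -p form of a parameter file.
# Usage: params_to_flags <file> <template_name>
params_to_flags() {
  flags=
  # The "|| [ -n ... ]" keeps a final line that lacks a trailing newline.
  while IFS= read -r line || [ -n "$line" ]; do
    [ -z "$line" ] && continue      # skip empty lines
    flags="$flags -p $line"
  done < "$1"
  printf 'oc new-app %s%s\n' "$2" "$flags"
}

# Recreate the example file in a temporary location and print the command.
params_file=$(mktemp)
printf 'ADMIN_USERNAME=admin\nADMIN_PASSWORD=mypassword\n' > "$params_file"
params_to_flags "$params_file" ruby-helloworld-sample
rm -f "$params_file"
```

The printed command matches the `-p ADMIN_USERNAME=admin -p ADMIN_PASSWORD=mypassword` form shown earlier in this section.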

3.3.4. Modifying application creation

The

new-app

command generates OpenShift Container Platform objects that build, deploy, and run the application that is created. Normally, these objects are created in the current project and assigned names that are derived from the input source repositories or the input images. However, with

new-app

you can modify this behavior.

Table 3.2. new-app output objects

| Object | Description |
|---|---|
| BuildConfig | A BuildConfig object is created for each source repository that is specified in the command line. The BuildConfig object specifies the strategy to use, the source location, and the build output location. |
| ImageStreams | For the BuildConfig object, two image streams are usually created. One represents the input image. With source builds, this is the builder image. With Docker builds, this is the FROM image. The second one represents the output image. If a container image was specified as input to new-app, then an image stream is created for that image as well. |
| DeploymentConfig | A DeploymentConfig object is created either to deploy the output of a build, or a specified image. The new-app command creates emptyDir volumes for all Docker volumes that are specified in containers included in the resulting DeploymentConfig object. |
| Service | The new-app command attempts to detect exposed ports in input images. It uses the lowest numeric exposed port to generate a service that exposes that port. To expose a different port, after new-app has completed, simply use the oc expose command to generate additional services. |
| Other | Other objects can be generated when instantiating templates, according to the template. |
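The Service entry in Table 3.2 states that the lowest numeric exposed port is used. As a small illustration of that selection rule, the following sketch picks the lowest value from a list of port numbers. The `lowest_port` function and the port values are hypothetical. The sketch does not inspect a real image.

```shell
# Hypothetical helper: print the numerically lowest port from the arguments.
lowest_port() {
  printf '%s\n' "$@" | sort -n | head -n 1
}

# Example: an image that exposes three ports.
lowest_port 8443 8080 9090   # prints 8080
```

The numeric sort matters here: a plain lexical sort would place `10000` before `8080`.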

3.3.4.1. Specifying environment variables

When generating applications from a template, source, or an image, you can use the

-e|--env

argument to pass environment variables to the application container at run time:

$ oc new-app openshift/postgresql-92-centos7 \

-e POSTGRESQL_USER=user \

-e POSTGRESQL_DATABASE=db \

-e POSTGRESQL_PASSWORD=password

The variables can also be read from a file using the

--env-file

argument. The following is an example file called

postgresql.env

:

POSTGRESQL_USER=user

POSTGRESQL_DATABASE=db

POSTGRESQL_PASSWORD=password

Read the variables from the file:

$ oc new-app openshift/postgresql-92-centos7 --env-file=postgresql.env

Additionally, environment variables can be given on standard input by using

--env-file=-

:

$ cat postgresql.env | oc new-app openshift/postgresql-92-centos7 --env-file=-

Any

BuildConfig

objects created as part of

new-app

processing are not updated with environment variables passed with the

-e|--env

or

--env-file

argument.

3.3.4.2. Specifying build environment variables

When generating applications from a template, source, or an image, you can use the

--build-env

argument to pass environment variables to the build container at run time:

$ oc new-app openshift/ruby-23-centos7 \

--build-env HTTP_PROXY=http://myproxy.net:1337/ \

--build-env GEM_HOME=~/.gem

The variables can also be read from a file using the

--build-env-file

argument. The following is an example file called

ruby.env

:

HTTP_PROXY=http://myproxy.net:1337/

GEM_HOME=~/.gem

Read the variables from the file:

$ oc new-app openshift/ruby-23-centos7 --build-env-file=ruby.env

Additionally, environment variables can be given on standard input by using

--build-env-file=-

:

$ cat ruby.env | oc new-app openshift/ruby-23-centos7 --build-env-file=-

3.3.4.3. Specifying labels

When generating applications from source, images, or templates, you can use the

-l|--label

argument to add labels to the created objects. Labels make it easy to collectively select, configure, and delete objects associated with the application.

$ oc new-app https://github.com/openshift/ruby-hello-world -l name=hello-world

3.3.4.4. Viewing the output without creation

To see a dry-run of running the

new-app

command, you can use the

-o|--output

argument with a

yaml

or

json

value. You can then use the output to preview the objects that are created or redirect it to a file that you can edit. After you are satisfied, you can use

oc create

to create the OpenShift Container Platform objects.

To output

new-app

artifacts to a file, run the following:

$ oc new-app https://github.com/openshift/ruby-hello-world \

-o yaml > myapp.yaml

Edit the file:

$ vi myapp.yaml

Create a new application by referencing the file:

$ oc create -f myapp.yaml

3.3.4.5. Creating objects with different names

Objects created by

new-app

are normally named after the source repository, or the image used to generate them. You can set the name of the objects produced by adding a

--name

flag to the command:

$ oc new-app https://github.com/openshift/ruby-hello-world --name=myapp

3.3.4.6. Creating objects in a different project

Normally,

new-app

creates objects in the current project. However, you can create objects in a different project by using the

-n|--namespace

argument:

$ oc new-app https://github.com/openshift/ruby-hello-world -n myproject

3.3.4.7. Creating multiple objects

The

new-app

command can create multiple applications when you specify multiple parameters to

new-app

. Labels specified in the command line apply to all objects created by the single command. Environment variables apply to all components created from source or images.

To create an application from a source repository and a Docker Hub image:

$ oc new-app https://github.com/openshift/ruby-hello-world mysql

If a source code repository and a builder image are specified as separate arguments,

new-app

uses the builder image as the builder for the source code repository. If this is not the intent, specify the required builder image for the source using the

~

separator.

3.3.4.8. Grouping images and source in a single pod

The

new-app

command allows deploying multiple images together in a single pod. To specify which images to group together, use the

+

separator. The

--group

command line argument can also be used to specify the images that should be grouped together. To group the image built from a source repository with other images, specify its builder image in the group:

$ oc new-app ruby+mysql

To deploy an image built from source and an external image together:

$ oc new-app \

ruby~https://github.com/openshift/ruby-hello-world \

mysql \

--group=ruby+mysql

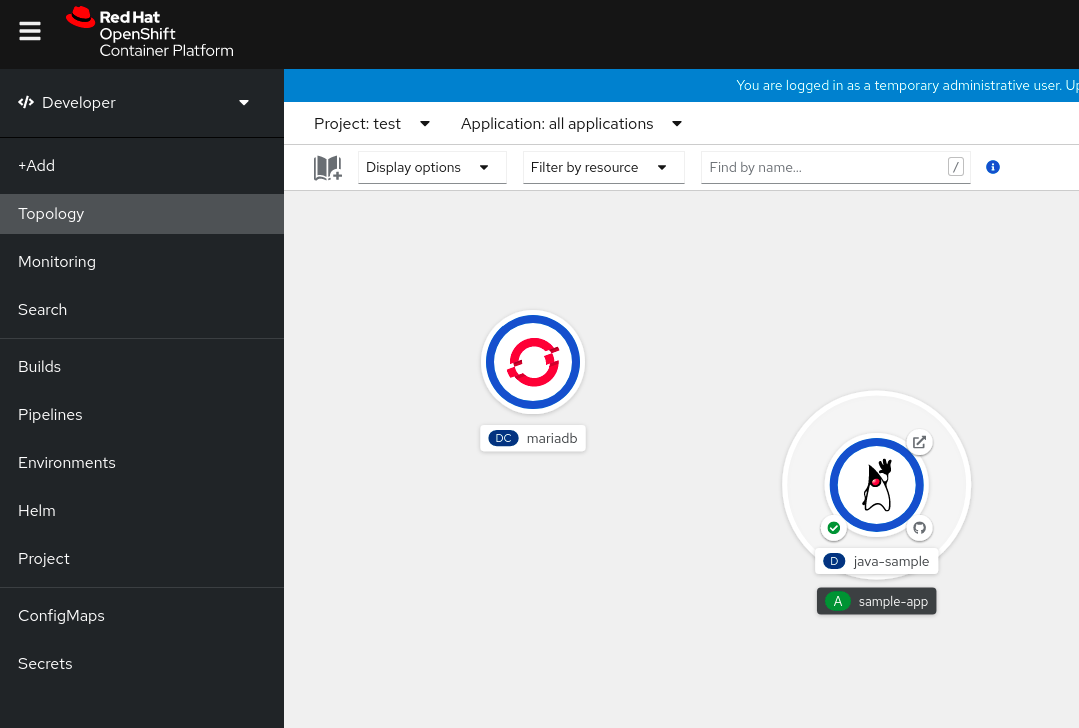

Chapter 4. Viewing application composition by using the Topology view

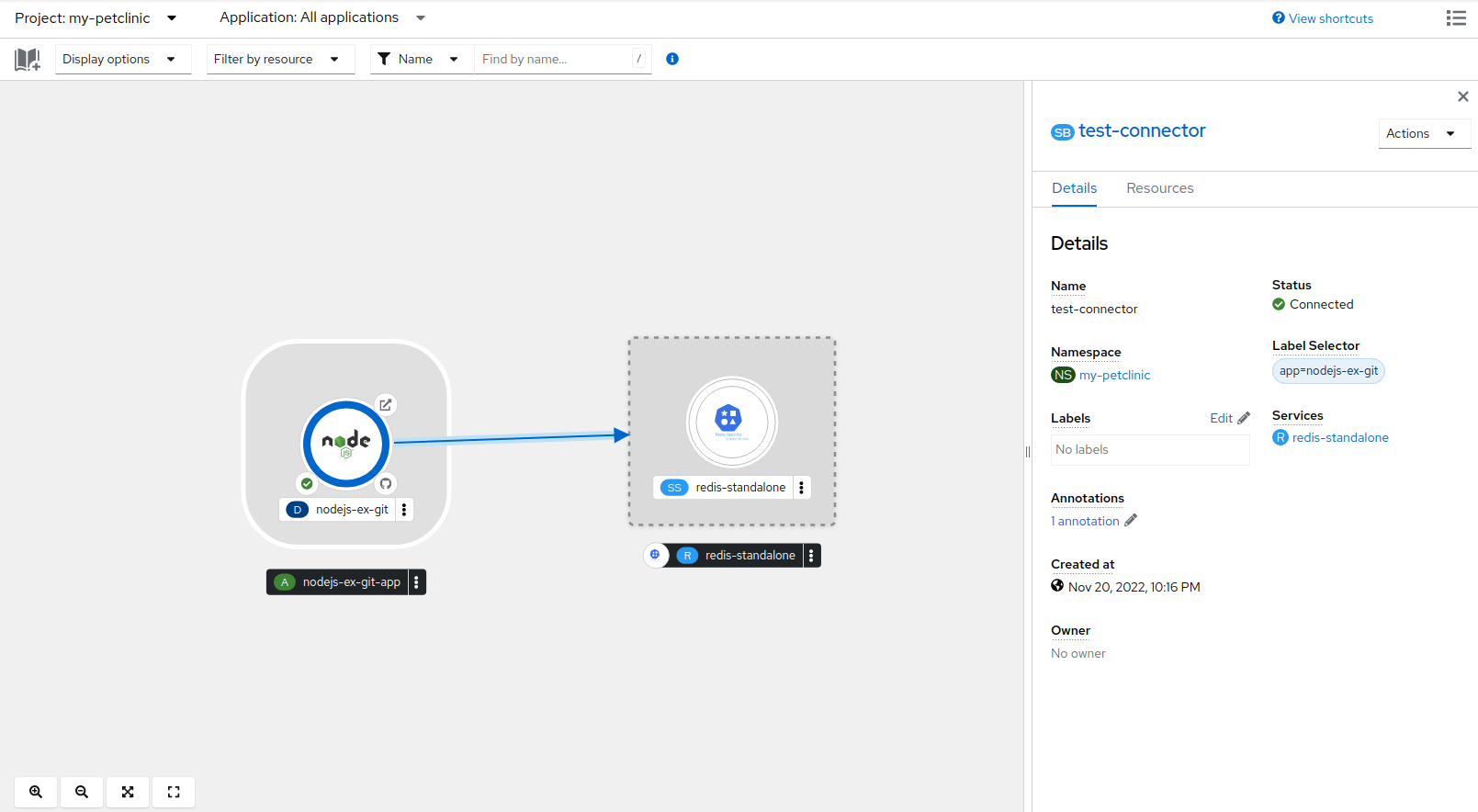

The

Topology

view in the

Developer

perspective of the web console provides a visual representation of all the applications within a project, their build status, and the components and services associated with them.

4.2. Viewing the topology of your application

You can navigate to the

Topology

view using the left navigation panel in the

Developer

perspective. After you deploy an application, you are directed automatically to the

Graph view

where you can see the status of the application pods, quickly access the application on a public URL, access the source code to modify it, and see the status of your last build. You can zoom in and out to see more details for a particular application.

The

Topology

view provides you the option to monitor your applications using the

List

view. Use the

List view

icon to see a list of all your applications and use the

Graph view

icon to switch back to the graph view.

You can customize the views as required using the following:

Use the

Find by name

field to find the required components. Search results may appear outside of the visible area; click

Fit to Screen

from the lower-left toolbar to resize the

Topology

view to show all components.

Use the

Display Options

drop-down list to configure the

Topology

view of the various application groupings. The options are available depending on the types of components deployed in the project:

Expand

group

Virtual Machines: Toggle to show or hide the virtual machines.

Application Groupings: Clear to condense the application groups into cards with an overview of an application group and alerts associated with it.

Helm Releases: Clear to condense the components deployed as Helm Release into cards with an overview of a given release.

Knative Services: Clear to condense the Knative Service components into cards with an overview of a given component.

Operator Groupings: Clear to condense the components deployed with an Operator into cards with an overview of the given group.

Show

elements based on

Pod Count

or

Labels

Pod Count: Select to show the number of pods of a component in the component icon.

Labels: Toggle to show or hide the component labels.

The

Topology

view also provides you the

Export application

option to download your application in the ZIP file format. You can then import the downloaded application to another project or cluster. For more details, see

Exporting an application to another project or cluster

in the

Additional resources

section.

4.3. Interacting with applications and components

In the

Topology

view in the

Developer

perspective of the web console, the

Graph view

provides the following options to interact with applications and components:

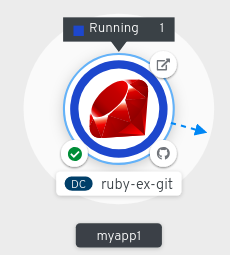

Click

Open URL

to see your application exposed by the route on a public URL.

Click

Edit Source code

to access your source code and modify it.

This feature is available only when you create applications using the

From Git

,

From Catalog

, and the

From Dockerfile

options.

Hover your cursor over the lower left icon on the pod to see the name of the latest build and its status. The status of the application build is indicated as New, Pending, Running, Completed, Failed, or Canceled.

The status or phase of the pod is indicated by different colors and tooltips as:

Running: The pod is bound to a node and all of the containers are created. At least one container is still running or is in the process of starting or restarting.

Not Ready: The pod runs multiple containers, and not all of the containers are ready.

Warning: Containers in the pod are being terminated, but termination did not succeed. Some containers might be in other states.

Failed: All containers in the pod terminated, but at least one container terminated in failure. That is, the container either exited with a non-zero status or was terminated by the system.

Pending: The pod is accepted by the Kubernetes cluster, but one or more of the containers has not been set up and made ready to run. This includes the time a pod spends waiting to be scheduled as well as the time spent downloading container images over the network.

Succeeded: All containers in the pod terminated successfully and will not be restarted.

Terminating: When a pod is being deleted, it is shown as Terminating by some kubectl commands. The Terminating status is not one of the pod phases. A pod is granted a graceful termination period, which defaults to 30 seconds.

Unknown: The state of the pod could not be obtained. This phase typically occurs due to an error in communicating with the node where the pod should be running.

After you create an application and an image is deployed, the status is shown as

Pending

. After the application is built, it is displayed as

Running

.

The application resource name is appended with indicators for the different types of resource objects as follows:

CJ: CronJob

D: Deployment

DC: DeploymentConfig

DS: DaemonSet

J: Job

P: Pod

SS: StatefulSet

(Knative): A serverless application

Serverless applications take some time to load and display on the

Graph view

. When you deploy a serverless application, it first creates a service resource and then a revision. After that, it is deployed and displayed on the

Graph view

. If it is the only workload, you might be redirected to the

Add

page. After the revision is deployed, the serverless application is displayed on the

Graph view

.

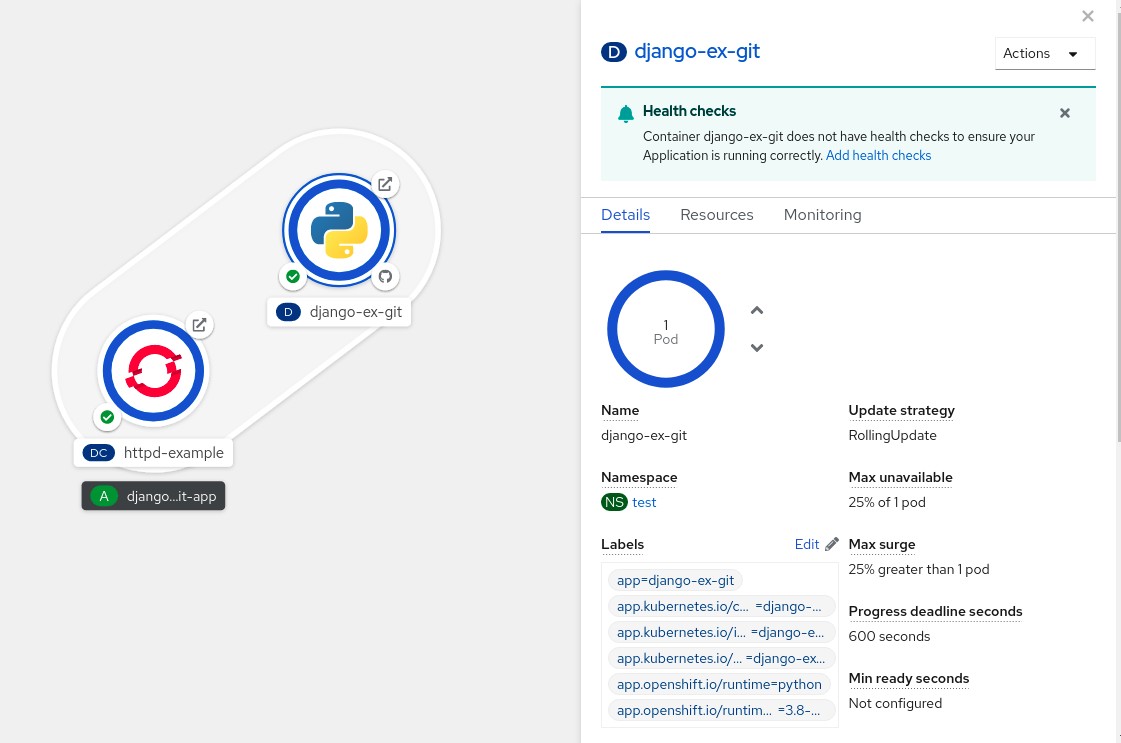

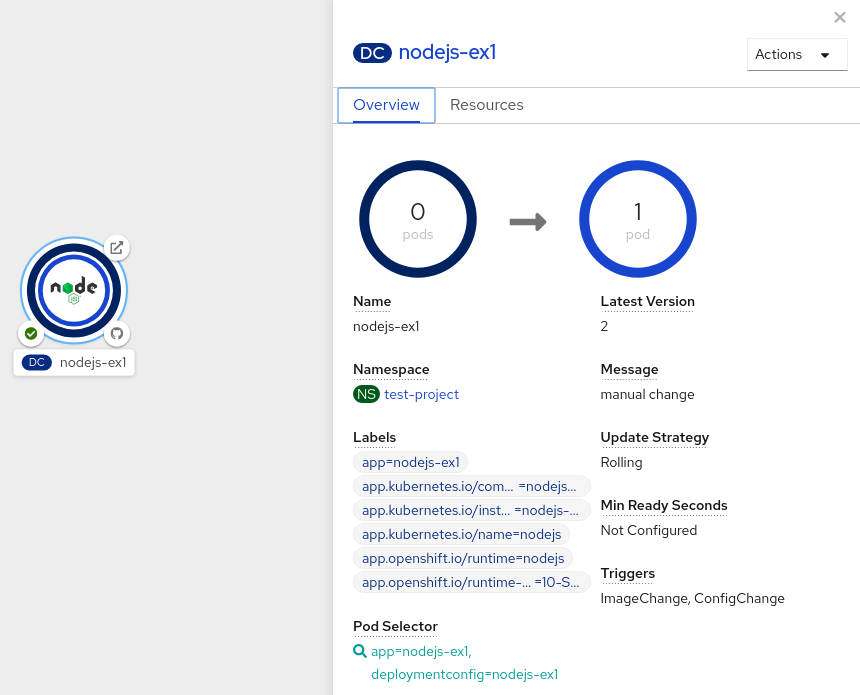

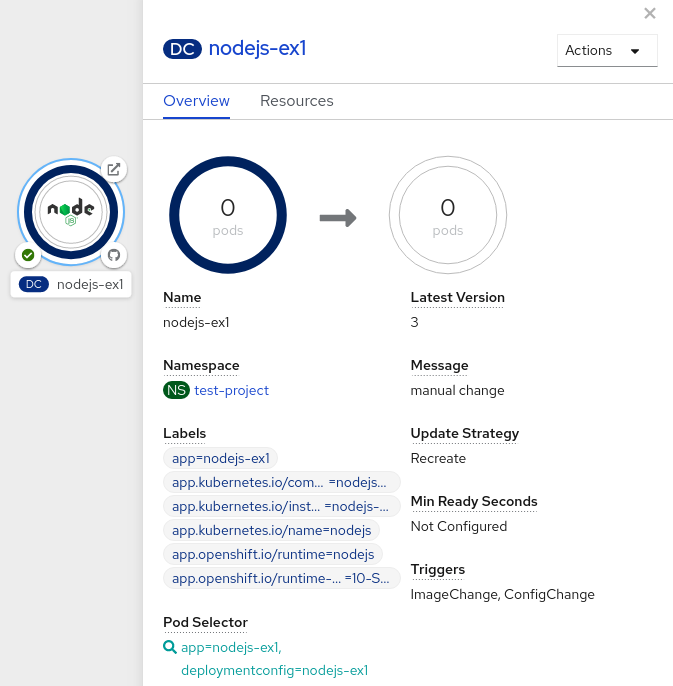

4.4. Scaling application pods and checking builds and routes

The

Topology

view provides the details of the deployed components in the

Overview

panel. You can use the

Overview

and

Details

tabs to scale the application pods, check build status, services, and routes as follows:

Click on the component node to see the

Overview

panel to the right. Use the

Details

tab to:

Scale your pods using the up and down arrows to increase or decrease the number of instances of the application manually. For serverless applications, the pods are automatically scaled down to zero when idle and scaled up depending on the channel traffic.

Check the

Labels

,

Annotations

, and

Status

of the application.

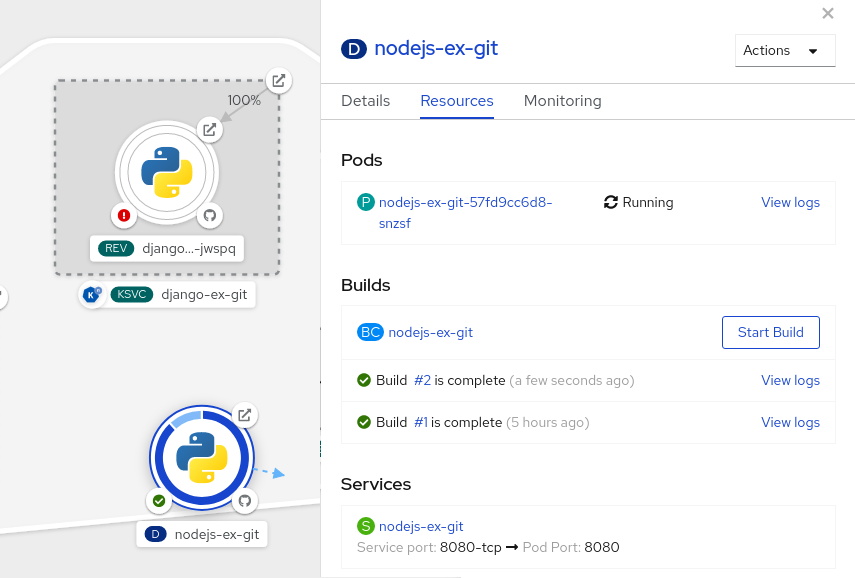

Click the

Resources

tab to:

See the list of all the pods, view their status, access logs, and click on the pod to see the pod details.

See the builds, their status, access logs, and start a new build if needed.

See the services and routes used by the component.

For serverless applications, the

Resources

tab provides information on the revision, routes, and the configurations used for that component.

4.5. Adding components to an existing project

You can add components to a project.

Procedure

-

Navigate to the

+Add

view.

Click Add to Project next to the left navigation pane or press Ctrl+Space.

Search for the component and click the

Start

/

Create

/

Install

button or press

Enter

to add the component to the project and see it in the topology

Graph view

.

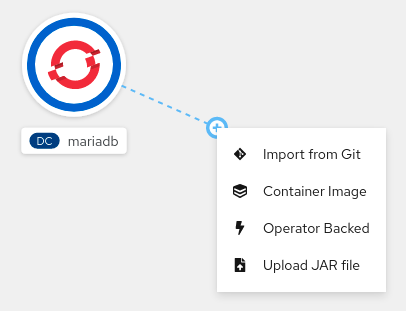

Alternatively, you can also use the available options in the context menu, such as

Import from Git

,

Container Image

,

Database

,

From Catalog

,

Operator Backed

,

Helm Charts

,

Samples

, or

Upload JAR file

, by right-clicking in the topology

Graph view

to add a component to your project.

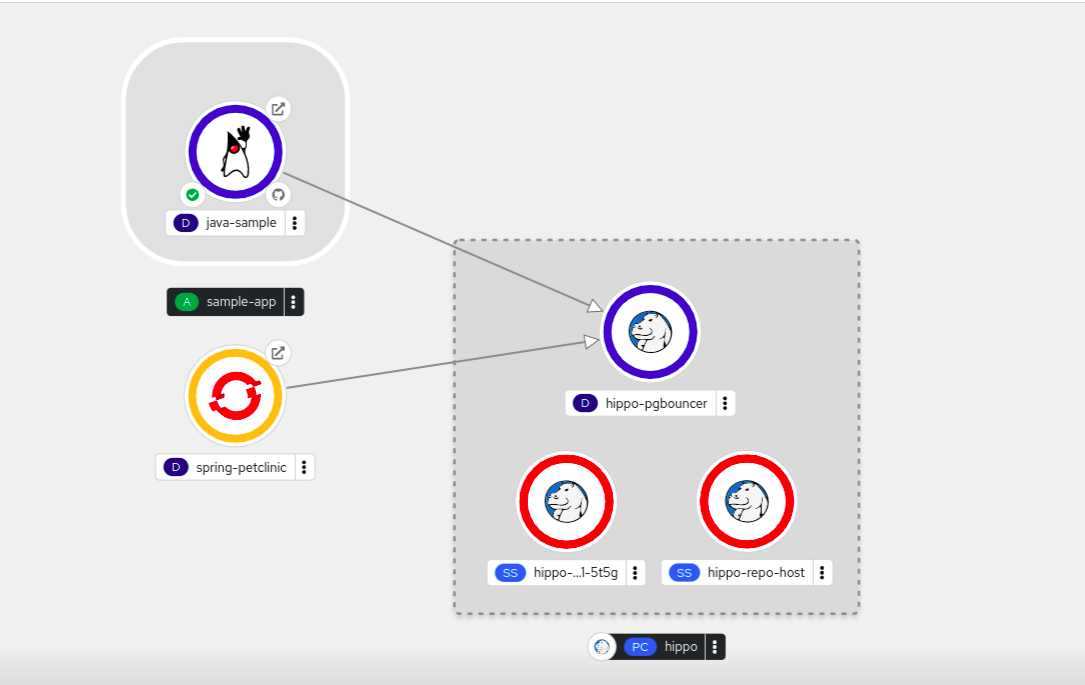

4.6. Grouping multiple components within an application

You can use the

+Add

view to add multiple components or services to your project and use the topology

Graph view

to group applications and resources within an application group.

Prerequisites

-

You have created and deployed at least two components on OpenShift Container Platform using the

Developer

perspective.

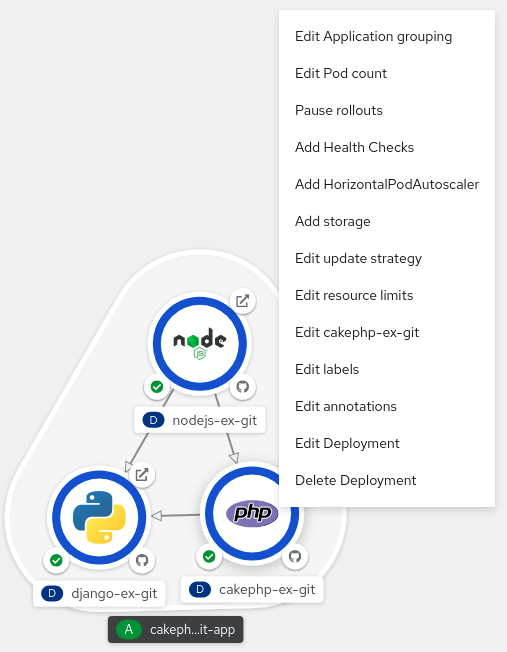

Alternatively, you can also add the component to an application as follows:

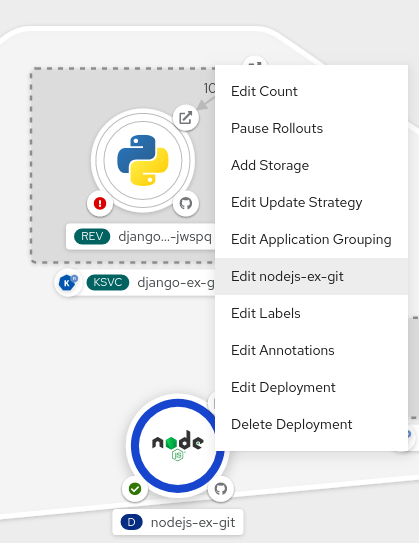

Click the service pod to see the

Overview

panel to the right.

Click the

Actions

drop-down menu and select

Edit Application Grouping

.

In the

Edit Application Grouping

dialog box, click the

Application

drop-down list, and select an appropriate application group.

Click

Save

to add the service to the application group.

You can remove a component from an application group by selecting the component and using

Shift

+ drag to drag it out of the application group.

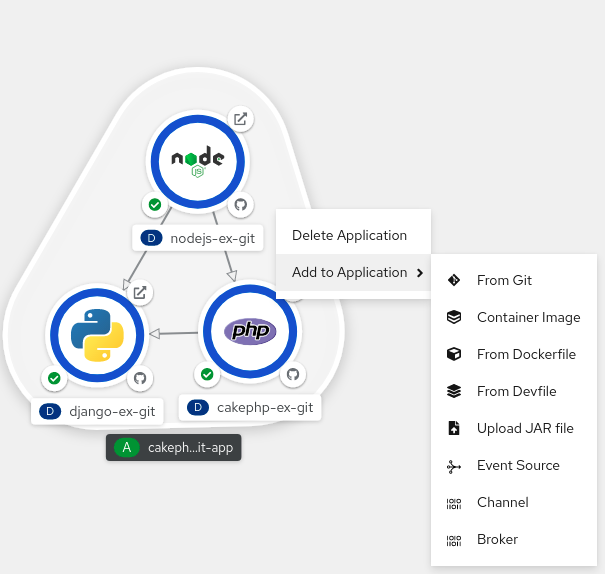

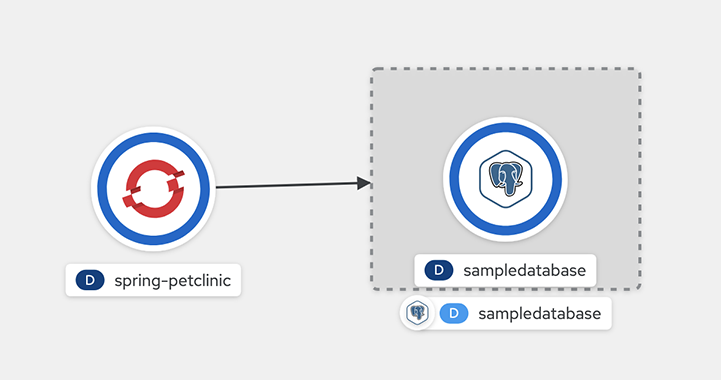

4.7. Adding services to your application

To add a service to your application, use the

+Add

actions from the context menu in the topology

Graph view

.

In addition to the context menu, you can add services by using the sidebar or hovering and dragging the dangling arrow from the application group.

Procedure

-

Right-click an application group in the topology

Graph view

to display the context menu.

-

Use

Add to Application

to select a method for adding a service to the application group, such as

From Git

,

Container Image

,

From Dockerfile

,

From Devfile

,

Upload JAR file

,

Event Source

,

Channel

, or

Broker

.

Complete the form for the method you choose and click

Create

. For example, to add a service based on the source code in your Git repository, choose the

From Git

method, fill in the

Import from Git

form, and click

Create

.

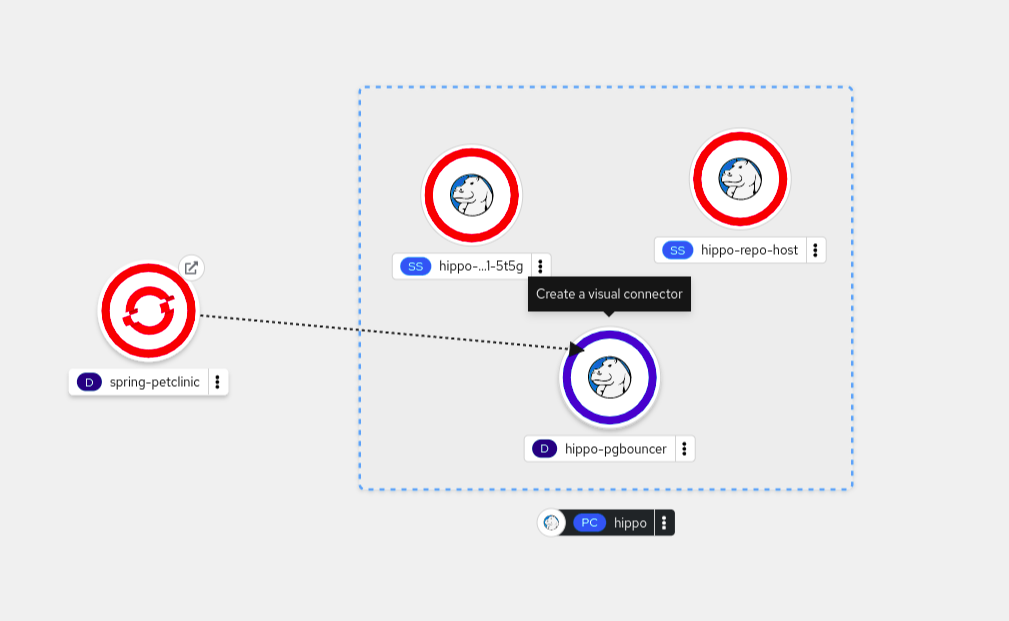

4.8. Removing services from your application

In the topology Graph view, remove a service from your application by using the context menu.

Procedure

-

Right-click on a service in an application group in the topology

Graph view

to display the context menu.

Select

Delete Deployment

to delete the service.

4.9. Labels and annotations used for the Topology view

The

Topology

view uses the following labels and annotations:

Icon displayed in the node: Icons in the node are defined by looking for matching icons using the app.openshift.io/runtime label, followed by the app.kubernetes.io/name label. This matching is done using a predefined set of icons.

Link to the source code editor or the source: The app.openshift.io/vcs-uri annotation is used to create links to the source code editor.

Node Connector: The app.openshift.io/connects-to annotation is used to connect the nodes.

App grouping: The app.kubernetes.io/part-of=<appname> label is used to group the applications, services, and components.

For detailed information on the labels and annotations OpenShift Container Platform applications must use, see

Guidelines for labels and annotations for OpenShift applications

.
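As an illustration of how the labels and annotations in this section might be applied from the CLI, the following sketch prints `oc label` and `oc annotate` commands for a workload without running them. The `topology_metadata_cmds` function, the workload name `deployment/frontend`, the application name `shop`, the runtime value `nodejs`, and the repository URL are all hypothetical. Review the printed commands against your own resources before running them.

```shell
# Hypothetical helper: print (not run) commands that set Topology metadata.
# Usage: topology_metadata_cmds <workload> <app_group> <runtime> <repo_url>
topology_metadata_cmds() {
  workload=$1
  app=$2
  runtime=$3
  repo=$4
  # Labels control the application grouping and the node icon.
  printf 'oc label %s app.kubernetes.io/part-of=%s app.openshift.io/runtime=%s\n' \
    "$workload" "$app" "$runtime"
  # The annotation provides the link to the source code.
  printf 'oc annotate %s app.openshift.io/vcs-uri=%s\n' "$workload" "$repo"
}

topology_metadata_cmds deployment/frontend shop nodejs https://github.com/example/frontend
```

Printing the commands first keeps the sketch safe to run without access to a cluster.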

4.10. Additional resources

Chapter 5. Exporting applications

As a developer, you can export your application in the ZIP file format. Based on your needs, import the exported application to another project in the same cluster or a different cluster by using the

Import YAML

option in the

+Add

view. Exporting your application lets you reuse your application resources and saves time.

-

In the Developer perspective, perform one of the following steps:

Navigate to the

+Add

view and click

Export application

in the

Application portability

tile.

Navigate to the

Topology

view and click

Export application

.

Click

OK

in the

Export Application

dialog box. A notification opens to confirm that the export of resources from your project has started.

Optional steps that you might need to perform in the following scenarios:

If you have started exporting an incorrect application, click

Export application

→

Cancel Export

.

If your export is already in progress and you want to start a fresh export, click

Export application

→

Restart Export

.

If you want to view logs associated with exporting an application, click

Export application

and the

View Logs

link.

After a successful export, click

Download

in the dialog box to download application resources in ZIP format onto your machine.

Chapter 6. Connecting applications to services

6.1. Release notes for Service Binding Operator

The Service Binding Operator consists of a controller and an accompanying custom resource definition (CRD) for service binding. It manages the data plane for workloads and backing services. The Service Binding Controller reads the data made available by the control plane of backing services. Then, it projects this data to workloads according to the rules specified through the

ServiceBinding

resource.

With Service Binding Operator, you can:

Bind your workloads together with Operator-managed backing services.

Automate configuration of binding data.

Provide service operators a low-touch administrative experience to provision and manage access to services.

Enrich development lifecycle with a consistent and declarative service binding method that eliminates discrepancies in cluster environments.

The custom resource definition (CRD) of the Service Binding Operator supports the following APIs:

Service Binding

with the

binding.operators.coreos.com

API group.

Service Binding (Spec API)

with the

servicebinding.io

API group.

Some features in the following table are in

Technology Preview

. These experimental features are not intended for production use.

In the table, features are marked with the following statuses:

TP

:

Technology Preview

GA

:

General Availability

Note the following scope of support on the Red Hat Customer Portal for these features:

Table 6.1. Support matrix

| Service Binding Operator version | binding.operators.coreos.com support status | servicebinding.io support status | OpenShift versions |
|---|---|---|---|
| 1.3.3 | | | 4.9-4.12 |
| 1.3.1 | | | 4.9-4.11 |
| 1.3 | | | 4.9-4.11 |
| 1.2 | | | 4.7-4.11 |
| 1.1.1 | | | 4.7-4.10 |
| 1.1 | | | 4.7-4.10 |
| 1.0.1 | | | 4.7-4.9 |
| 1.0 | | | 4.7-4.9 |

6.1.2. Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see

Red Hat CTO Chris Wright’s message

.

6.1.3. Release notes for Service Binding Operator 1.3.3

Service Binding Operator 1.3.3 is now available on OpenShift Container Platform 4.9, 4.10, 4.11, and 4.12.

-

Before this update, a security vulnerability

CVE-2022-41717

was noted for Service Binding Operator. This update fixes the

CVE-2022-41717

error and updates the

golang.org/x/net

package from v0.0.0-20220906165146-f3363e06e74c to v0.4.0.

APPSVC-1256

Before this update, Provisioned Services were only detected if the respective resource had the "servicebinding.io/provisioned-service: true" annotation set while other Provisioned Services were missed. With this update, the detection mechanism identifies all Provisioned Services correctly based on the "status.binding.name" attribute.

APPSVC-1204

6.1.4. Release notes for Service Binding Operator 1.3.1

Service Binding Operator 1.3.1 is now available on OpenShift Container Platform 4.9, 4.10, and 4.11.

-

Before this update, a security vulnerability

CVE-2022-32149

was noted for Service Binding Operator. This update fixes the

CVE-2022-32149

error and updates the

golang.org/x/text

package from v0.3.7 to v0.3.8.

APPSVC-1220

6.1.5. Release notes for Service Binding Operator 1.3

Service Binding Operator 1.3 is now available on OpenShift Container Platform 4.9, 4.10, and 4.11.

6.1.5.1. Removed functionality

-

In Service Binding Operator 1.3, the Operator Lifecycle Manager (OLM) descriptor feature has been removed to improve resource utilization. As an alternative to OLM descriptors, you can use CRD annotations to declare binding data.

6.1.6. Release notes for Service Binding Operator 1.2

Service Binding Operator 1.2 is now available on OpenShift Container Platform 4.7, 4.8, 4.9, 4.10, and 4.11.

This section highlights what is new in Service Binding Operator 1.2:

Enable Service Binding Operator to consider optional fields in the annotations by setting the

optional

flag value to

true

.

Support for

servicebinding.io/v1beta1

resources.

Improvements to the discoverability of bindable services by exposing the relevant binding secret without requiring a workload to be present.

-

Currently, when you install Service Binding Operator on OpenShift Container Platform 4.11, the memory footprint of Service Binding Operator increases beyond expected limits. With low usage, however, the memory footprint stays within the expected ranges of your environment or scenarios. In comparison with OpenShift Container Platform 4.10, under stress, both the average and maximum memory footprint increase considerably. This issue is evident in the previous versions of Service Binding Operator as well. There is currently no workaround for this issue.

APPSVC-1200

By default, the projected files get their permissions set to 0644. Service Binding Operator cannot set specific permissions due to a bug in Kubernetes that causes issues if the service expects specific permissions such as

0600

. As a workaround, you can modify the code of the program or the application that is running inside a workload resource to copy the file to the

/tmp

directory and set the appropriate permissions.

APPSVC-1127

There is currently a known issue with installing Service Binding Operator in a single namespace installation mode. The absence of an appropriate namespace-scoped role-based access control (RBAC) rule prevents the successful binding of an application to a few known Operator-backed services that the Service Binding Operator can automatically detect and bind to. When this happens, it generates an error message similar to the following example:

6.1.7. Release notes for Service Binding Operator 1.1.1

Service Binding Operator 1.1.1 is now available on OpenShift Container Platform 4.7, 4.8, 4.9, and 4.10.

-

Before this update, a security vulnerability

CVE-2021-38561

was noted for Service Binding Operator Helm chart. This update fixes the

CVE-2021-38561

error and updates the

golang.org/x/text

package from v0.3.6 to v0.3.7.

APPSVC-1124

Before this update, users of the Developer Sandbox did not have sufficient permissions to read

ClusterWorkloadResourceMapping

resources. As a result, Service Binding Operator prevented all service bindings from being successful. With this update, the Service Binding Operator now includes the appropriate role-based access control (RBAC) rules for any authenticated subject including the Developer Sandbox users. These RBAC rules allow the Service Binding Operator to

get

,

list

, and

watch

the

ClusterWorkloadResourceMapping

resources for the Developer Sandbox users and to process service bindings successfully.

APPSVC-1135

-

There is currently a known issue with installing Service Binding Operator in a single namespace installation mode. The absence of an appropriate namespace-scoped role-based access control (RBAC) rule prevents the successful binding of an application to a few known Operator-backed services that the Service Binding Operator can automatically detect and bind to. When this happens, it generates an error message similar to the following example:

6.1.8. Release notes for Service Binding Operator 1.1

Service Binding Operator 1.1 is now available on OpenShift Container Platform 4.7, 4.8, 4.9, and 4.10.

This section highlights what is new in Service Binding Operator 1.1:

Service Binding Options

Workload resource mapping: Define exactly where binding data needs to be projected for the secondary workloads.

Bind new workloads using a label selector.

-

Before this update, service bindings that used label selectors to pick up workloads did not project service binding data into the new workloads that matched the given label selectors. As a result, the Service Binding Operator could not periodically bind such new workloads. With this update, service bindings now project service binding data into the new workloads that match the given label selector. The Service Binding Operator now periodically attempts to find and bind such new workloads.

APPSVC-1083


There is currently a known issue with installing Service Binding Operator in a single namespace installation mode. The absence of an appropriate namespace-scoped role-based access control (RBAC) rule prevents the successful binding of an application to a few known Operator-backed services that the Service Binding Operator can automatically detect and bind to. When this happens, it generates an error message similar to the following example:

6.1.9. Release notes for Service Binding Operator 1.0.1

Service Binding Operator is now available on OpenShift Container Platform 4.7, 4.8 and 4.9.

Service Binding Operator 1.0.1 supports OpenShift Container Platform 4.9 and later running on:

IBM Power Systems

IBM Z and LinuxONE

The custom resource definition (CRD) of the Service Binding Operator 1.0.1 supports the following APIs:

Service Binding with the `binding.operators.coreos.com` API group.

Service Binding (Spec API Tech Preview) with the `servicebinding.io` API group.
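To make the difference between the two API groups concrete, the following sketch expresses the same binding once in each. It is an illustration under assumptions: the API versions `v1alpha1` and `v1alpha3`, the workload and service names, and the `example.com/v1alpha1` `Database` kind are hypothetical placeholders rather than values taken from this release.

```yaml
# Hypothetical sketch: versions, kinds, and names are assumptions.
# Binding expressed with the binding.operators.coreos.com API group.
apiVersion: binding.operators.coreos.com/v1alpha1
kind: ServiceBinding
metadata:
  name: example-binding-coreos
spec:
  application:
    group: apps
    version: v1
    resource: deployments
    name: example-app
  services:
    - group: example.com
      version: v1alpha1
      kind: Database
      name: example-db
---
# The same binding expressed with the servicebinding.io API group.
apiVersion: servicebinding.io/v1alpha3
kind: ServiceBinding
metadata:
  name: example-binding-spec
spec:
  workload:
    apiVersion: apps/v1
    kind: Deployment
    name: example-app
  service:
    apiVersion: example.com/v1alpha1
    kind: Database
    name: example-db
```

The first form refers to the workload as `application` and accepts a list of `services`; the second uses `workload` and a single `service`.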

Service Binding (Spec API Tech Preview) with the `servicebinding.io` API group is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Some features in this release are currently in Technology Preview. These experimental features are not intended for production use.

Technology Preview Features Support Scope

In the table below, features are marked with the following statuses:

TP: Technology Preview

GA: General Availability

Note the following scope of support on the Red Hat Customer Portal for these features:

Table 6.2. Support matrix

| Feature | Service Binding Operator 1.0.1 |
| --- | --- |
| `binding.operators.coreos.com` API group | GA |
| `servicebinding.io` API group | TP |


Before this update, binding the data values from a `Cluster` custom resource (CR) of the `postgresql.k8s.enterprisedb.io/v1` API collected the `host` binding value from the `.metadata.name` field of the CR. The collected binding value was an incorrect hostname; the correct hostname is available in the `.status.writeService` field. With this update, the annotations that the Service Binding Operator uses to expose the binding data values from the backing service CR are now modified to collect the `host` binding value from the `.status.writeService` field. The Service Binding Operator uses these modified annotations to project the correct hostname in the `host` and `provider` bindings.
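The effect of such an annotation can be sketched as follows. This fragment is illustrative and is not the exact annotation set that the Operator applies: the CR name is hypothetical, the `spec` is omitted, and only the `host` value is shown, using the `service.binding/<name>: path={...}` annotation form.

```yaml
# Hypothetical fragment: CR name is a placeholder and the spec is omitted.
apiVersion: postgresql.k8s.enterprisedb.io/v1
kind: Cluster
metadata:
  name: example-cluster
  annotations:
    # Read the host binding value from the status field, not from .metadata.name.
    service.binding/host: 'path={.status.writeService}'
```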

APPSVC-1040

Before this update, when you bound a `PostgresCluster` CR of the `postgres-operator.crunchydata.com/v1beta1` API, the binding data values did not include the values for the database certificates. As a result, the application failed to connect to the database. With this update, the annotations that the Service Binding Operator uses to expose the binding data from the backing service CR are modified to include the database certificates. The Service Binding Operator uses these modified annotations to project the correct `ca.crt`, `tls.crt`, and `tls.key` certificate files.

APPSVC-1045

Before this update, when you bound a `PerconaXtraDBCluster` custom resource (CR) of the `pxc.percona.com` API, the binding data values did not include the `port` and `database` values. These binding values, along with the others already projected, are necessary for an application to successfully connect to the database service. With this update, the annotations that the Service Binding Operator uses to expose the binding data values from the backing service CR are now modified to project the additional `port` and `database` binding values. The Service Binding Operator uses these modified annotations to project the complete set of binding values that the application can use to successfully connect to the database service.

APPSVC-1073


Currently, when you install the Service Binding Operator in the single namespace installation mode, the absence of an appropriate namespace-scoped role-based access control (RBAC) rule prevents the successful binding of an application to a few known Operator-backed services that the Service Binding Operator can automatically detect and bind to. In addition, the following error message is generated:

6.1.10. Release notes for Service Binding Operator 1.0

Service Binding Operator is now available on OpenShift Container Platform 4.7, 4.8 and 4.9.

The custom resource definition (CRD) of the Service Binding Operator 1.0 supports the following APIs:

Service Binding with the `binding.operators.coreos.com` API group.

Service Binding (Spec API Tech Preview) with the `servicebinding.io` API group.

Service Binding (Spec API Tech Preview) with the `servicebinding.io` API group is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Some features in this release are currently in Technology Preview. These experimental features are not intended for production use.

Technology Preview Features Support Scope

In the table below, features are marked with the following statuses:

TP: Technology Preview

GA: General Availability

Note the following scope of support on the Red Hat Customer Portal for these features:

Table 6.3. Support matrix

|

Feature

|

Service Binding Operator 1.0

|

|

binding.operators.coreos.com

API group

servicebinding.io

API group

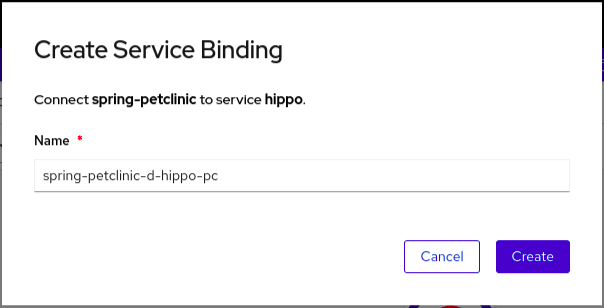

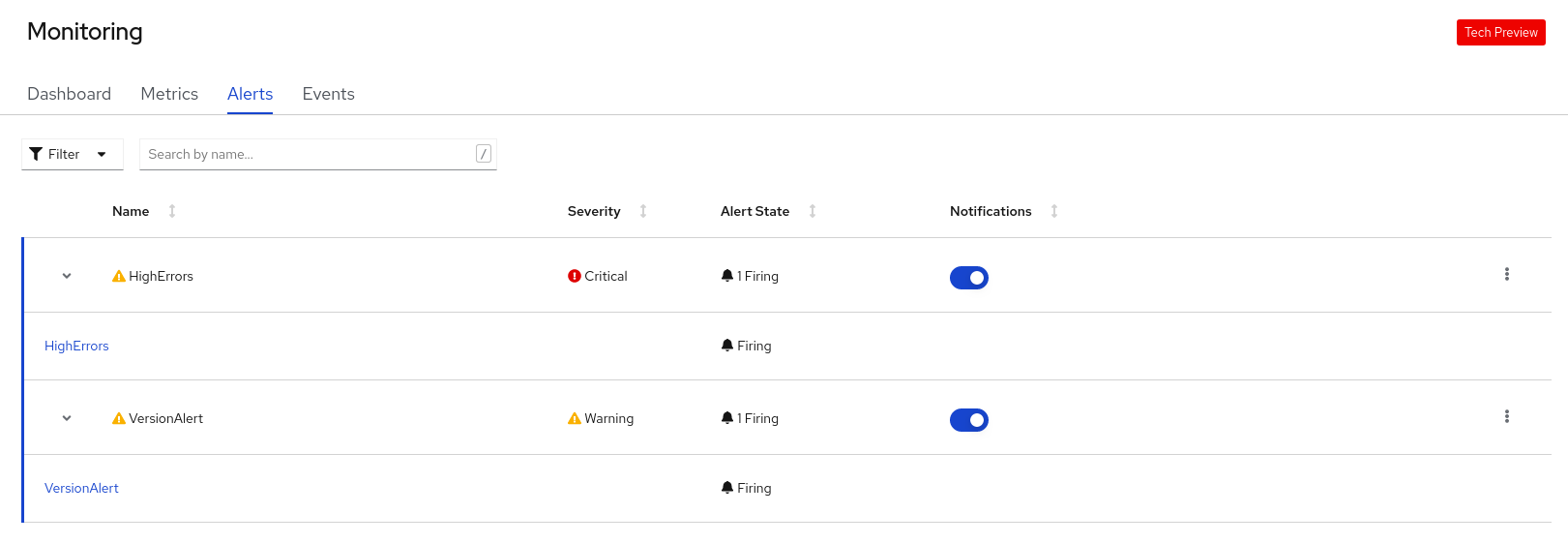

|